Robert Fromont is a Software Architect with decades of experience in the development and integration of web/mobile apps using current web technologies.

For details about technologies and skills, please see my Curriculum Vitae.

Past and present open-source projects include:

LaBB-CAT

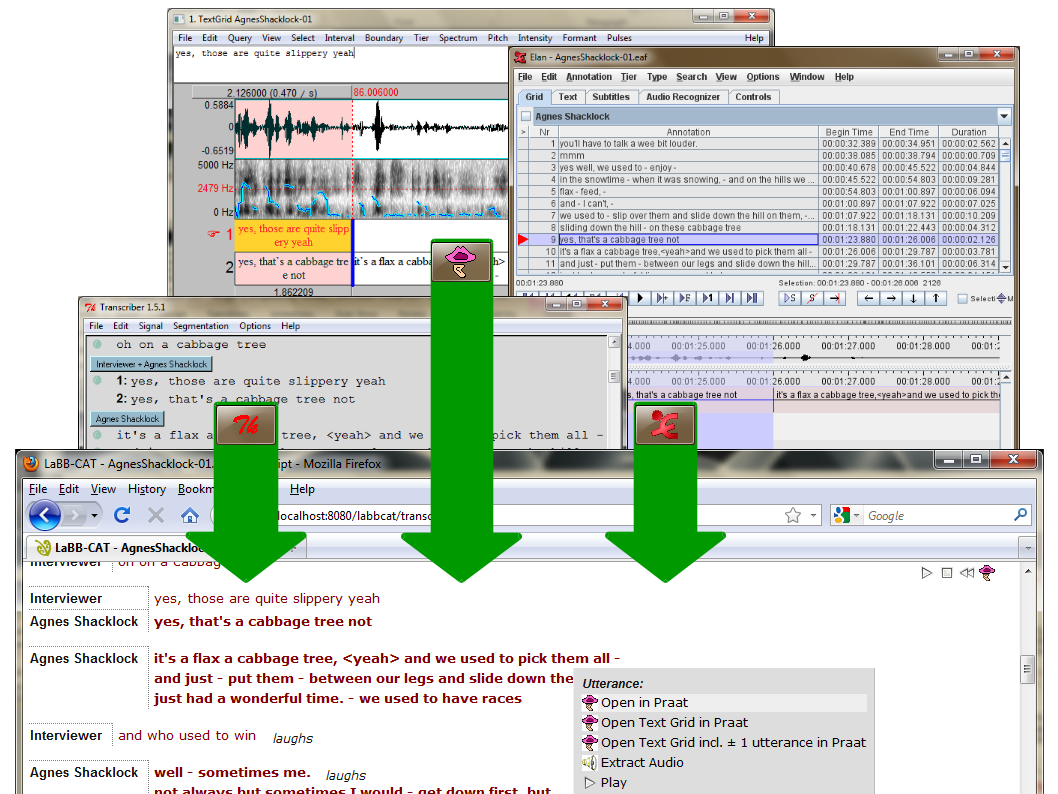

LaBB-CAT is a browser-based linguistics research tool that stores audio or video recordings, text transcripts, and other annotations.

Annotations of various types can be automatically generated or manually added.

The transcripts and annotations can be searched for particular text or regular expressions. The search results, or entire transcripts, can be viewed or saved in a variety of formats, and the related parts of the recordings can be played or opened in acoustic analysis software, all directly through the web browser.
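
Searching time-aligned transcripts works because each word token carries start and end offsets, so a regular-expression match on the text points straight at the stretch of recording to play or export. This is a minimal Python sketch of that idea; the `Token` class, the `search_tokens` function and the toy transcript are hypothetical, and this is not LaBB-CAT's data model or search API.

```python
import re
from dataclasses import dataclass


@dataclass
class Token:
    """A word token with start/end offsets (in seconds) into its recording."""
    text: str
    start: float
    end: float


def search_tokens(tokens, pattern):
    """Return (text, start, end) for every token whose whole text matches the regex."""
    regex = re.compile(pattern, re.IGNORECASE)
    return [(t.text, t.start, t.end) for t in tokens if regex.fullmatch(t.text)]


# A toy, hand-aligned transcript (invented data for illustration).
transcript = [
    Token("The", 0.00, 0.12),
    Token("cat", 0.12, 0.45),
    Token("sat", 0.45, 0.80),
    Token("on", 0.80, 0.95),
    Token("the", 0.95, 1.05),
    Token("mat", 1.05, 1.40),
]

# Find words ending in "at"; each hit says which stretch of audio to play or extract.
for text, start, end in search_tokens(transcript, r"\w*at"):
    print(f"{text}: {start:.2f}-{end:.2f} s")
```

Matching the whole token (`fullmatch`) rather than any substring keeps a pattern such as `the` from also hitting words like "there".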

By combining the signal data, the raw orthographic transcriptions, and some third-party data and tools, LaBB-CAT can automatically annotate the transcripts, for example with:

- Token tagging (pronunciation, part of speech, lemma, frequency)

- Syntactic parsing

- Forced alignment (determining the start and end time of each individual speech sound)

- Statistical tagging (frequency, type/token ratio, speech rate, LIWC)
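
The two simplest statistics in the list can be computed directly from a transcript's word tokens. This minimal Python sketch assumes that type/token ratio is the number of distinct (case-folded) word forms divided by the number of tokens, and that speech rate is tokens per second; the function names are hypothetical, and LaBB-CAT's own statistical layers may define these differently (for example, speech rate in syllables per second).

```python
def type_token_ratio(words):
    """Distinct word types divided by total tokens (case-insensitive); 0.0 if empty."""
    if not words:
        return 0.0
    types = {w.lower() for w in words}
    return len(types) / len(words)


def speech_rate(words, duration_seconds):
    """Tokens per second over a stretch of speech (one of several possible definitions)."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return len(words) / duration_seconds


utterance = "The cat sat on the mat".split()
print(type_token_ratio(utterance))  # 5 types ("the" occurs twice) / 6 tokens
print(speech_rate(utterance, 1.5))  # 6 tokens in 1.5 s -> 4.0
```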

Transcripts and annotations can be searched and analysed, and data can be extracted in a variety of formats, using the browser interface or programmatically using client packages for R, Python, JavaScript, and Java.

Users of LaBB-CAT include:

- the NZILBB at the University of Canterbury (Jennifer Hay, Margaret Maclagan, Jeanette King, Kevin Watson, Lynn Clark, Megan McAuliffe),

- the Glasgow University Laboratory of Phonetics (Jane Stuart-Smith),

- Arizona State University (Visar Berisha, Julie Liss),

- ZAS Berlin (Stefanie Jannedy),

- University of Hawaiʻi at Mānoa (Katie Drager),

- Adam Mickiewicz University in Poznań (Kamil Kaźmierski),

- Medical University of South Carolina (Boyd Davis, Charlene Pope),

- University of Oxford (Sarah Ogilvie),

- Griffith University (Gerry Docherty), and

- Australian National University (Ksenia Gnevsheva, Catherine Travis).

ElicitSpeech

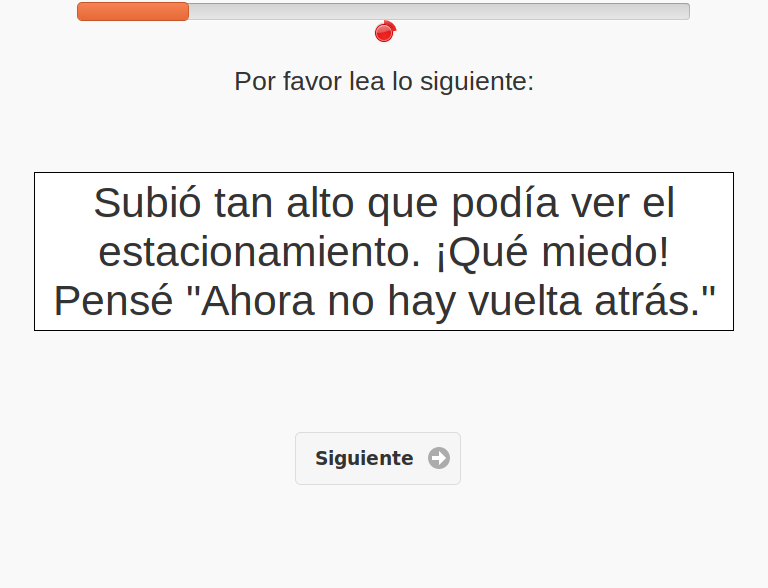

ElicitSpeech is a configurable web/mobile app for Android, iOS, and browsers that elicits speech samples for direct upload to LaBB-CAT.

It currently integrates with LaBB-CAT, where you can define a 'task' as a series of 'steps' that display prompts and text for the participant to read aloud, or ask metadata questions.

Features include:

- iOS or Android mobile app, and/or Chrome/Firefox web app

- display paginated textual instructions and prompts

- ask questions; answers can be typed text, numbers, checkboxes, selection from a list, dates/times

- arbitrary validation of input is possible (e.g. checking that one date is after another)

- steps can be shown contingent on the answers to previous questions

- record the participant's speech

- upload data to a LaBB-CAT corpus

- stimuli can be text, images, or video

- stimuli can be randomly sampled from a list
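
The task model described here (a task as a series of steps, with contingent steps, input validation and randomly sampled stimuli) can be sketched as plain data plus a few functions. This is a minimal Python illustration only: the `Step` class, its `condition` and `validate` fields, `visible_steps`, `sample_stimuli` and the example questions are all hypothetical, not ElicitSpeech's actual configuration format or code.

```python
import random
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional

Answers = dict  # step id -> the participant's answer so far


@dataclass
class Step:
    """One step of a hypothetical elicitation task."""
    id: str
    prompt: str
    # Show the step only when this returns True for the answers so far.
    condition: Optional[Callable[[Answers], bool]] = None
    # Return an error message for an invalid answer, or None if it is acceptable.
    validate: Optional[Callable[[object, Answers], Optional[str]]] = None


def visible_steps(steps, answers):
    """Steps whose condition (if any) is satisfied by the answers given so far."""
    return [s for s in steps if s.condition is None or s.condition(answers)]


def sample_stimuli(pool, k, seed=None):
    """Randomly choose k distinct stimuli (texts, image or video paths) from a list."""
    return random.Random(seed).sample(pool, k)


steps = [
    Step("dob", "Date of birth?"),
    Step("arrived", "When did you move to your current town?",
         validate=lambda a, ans: "Must be after date of birth" if a <= ans["dob"] else None),
    Step("other_lang", "Do you speak another language?"),
    Step("which_lang", "Which language?",
         condition=lambda ans: ans.get("other_lang") == "yes"),
    Step("read", "Please read the following sentences aloud."),
]

answers = {"dob": date(1990, 5, 1), "other_lang": "no"}
print([s.id for s in visible_steps(steps, answers)])  # "which_lang" is skipped
print(steps[1].validate(date(1985, 1, 1), answers))   # Must be after date of birth
print(sample_stimuli(["s1.txt", "s2.txt", "s3.txt", "s4.txt"], 2, seed=7))
```

Keeping conditions and validators as functions of all answers so far is what allows cross-question checks such as "one date is after another".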

ElicitSpeech has been used by:

- the NZILBB at the University of Canterbury (Sidney Wong, Gia Hurring),

- Aural Analytics (Visar Berisha, Julie Liss), and

- Mayo Clinic Arizona (Catherine Chong).

Digit Triplets Test

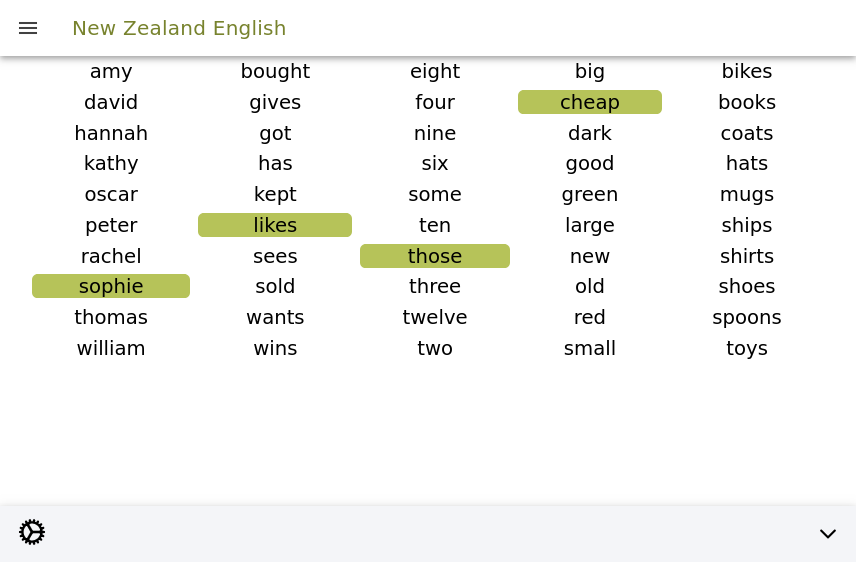

Digit Triplets Test is a web-based hearing screening test for New Zealand English that can be extended to a variety of languages.

A noisy recording of three digits is played, and the participant enters the digits before moving on to the next recording. As the test proceeds, the digits become harder to hear.
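
One common way for digit-triplet tests to make the digits harder to hear is an adaptive procedure that adjusts the signal-to-noise ratio (SNR) after each triplet and then reports a speech reception threshold (SRT). This minimal Python sketch assumes a 1-down/1-up rule, 2 dB steps, whole-triplet scoring, and an SRT taken as the mean SNR of the last few presentations; these are illustrative assumptions, and `run_staircase` and `speech_reception_threshold` are hypothetical names, not the parameters or code of the published test.

```python
from statistics import mean


def run_staircase(responses, start_snr=0.0, step_db=2.0):
    """Simple 1-down/1-up adaptive track over signal-to-noise ratio (SNR, in dB).

    responses: (presented, entered) digit strings, one pair per triplet.
    A triplet counts as correct only if all three digits are right; a correct
    answer lowers the SNR for the next triplet (harder), an error raises it (easier).
    Returns the SNR at which each triplet was presented.
    """
    snr = start_snr
    track = []
    for presented, entered in responses:
        track.append(snr)
        snr = snr - step_db if presented == entered else snr + step_db
    return track


def speech_reception_threshold(track, last_n=4):
    """Estimate the SRT as the mean SNR of the last `last_n` presentations."""
    return mean(track[-last_n:])


responses = [("123", "123"), ("456", "456"), ("789", "780"),
             ("251", "251"), ("638", "683"), ("904", "904")]
track = run_staircase(responses)
print(track)                              # [0.0, -2.0, -4.0, -2.0, -4.0, -2.0]
print(speech_reception_threshold(track))  # -3.0
```

A 1-down/1-up rule settles around the SNR at which about half of the triplets are identified correctly, which is why the later presentations are averaged.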

An implementation for New Zealand English has been published at nzhst.canterbury.ac.nz, but the system can easily be ported to other languages. Versions also exist for Te Reo Māori and Malay.

Matrix Sentence Test

The Matrix Sentence Test is a hearing test app that plays audio/video of a set of hard-to-hear sentences, which the participant must identify using a matrix of buttons.

The participant hears a noisy recording of a sentence and presses buttons in a matrix to identify what was said.

The stimulus can be audio only, or audio and video of the speaker's face as they speak.
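
Matrix sentence tests are commonly built from five word positions (for example name, verb, number, adjective, noun), with a column of alternatives for each position, so every combination is grammatical but cannot be guessed from context. This minimal Python sketch shows sentence generation and word-by-word scoring under that assumption; the word list, `make_sentence` and `score` are hypothetical and not taken from this app.

```python
import random

# Hypothetical word matrix: one column per sentence position, several
# alternatives per column (real tests use their own, larger word lists).
MATRIX = [
    ["Anna", "Peter", "Sarah"],     # name
    ["bought", "sees", "wants"],    # verb
    ["two", "five", "nine"],        # number
    ["red", "old", "small"],        # adjective
    ["boats", "chairs", "shoes"],   # noun
]


def make_sentence(matrix, rng):
    """Pick one word per column: grammatical, but not predictable from context."""
    return [rng.choice(column) for column in matrix]


def score(target, response):
    """Proportion of sentence positions where the pressed button matches the word played."""
    if len(target) != len(response):
        raise ValueError("expected one response per sentence position")
    correct = sum(t == r for t, r in zip(target, response))
    return correct / len(target)


rng = random.Random(1)
print(" ".join(make_sentence(MATRIX, rng)))
# Participant gets the number and the noun wrong: 3 of 5 words correct.
print(score(["Anna", "sees", "two", "red", "boats"],
            ["Anna", "sees", "five", "red", "shoes"]))  # 0.6
```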

Hexagon

Hexagon is an open-source content management system.

Modules include:

- freeform rich-text pages

- contact forms

- blogs/newsletters

- catalogs

- file downloads

- events calendar

- image galleries

- ...and more

Sites using Hexagon include:

3BC4 12DA 7995 426A 795A D881 C0A6 EF64 5982 2F8E

orcid.org/0000-0001-5271-5487